Western world

The term "Western world" has meant different things at different times. In the earliest history of Europe, it referred to Ancient Greece and the Aegean. During the Roman Empire, it meant the Western Roman Empire (covering the area from Croatia to Britain). At other times, it has meant Western Europe, Europe as a whole, or Christendom. During and after the Cold War, it sometimes meant the democratic countries, or the countries allied with the NATO powers.

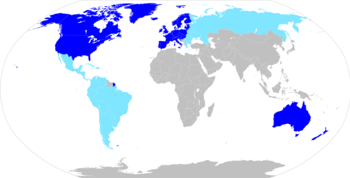

Today, it often refers to the places that have a European cultural heritage, known as Western civilization. This heritage combines Judeo-Christian ethics, classical Greco-Roman thought, and the cultural practices of the "barbarian" peoples of Northern Europe. Under the strictest definition, the Western world consists of the United States, Canada, Western Europe, Australia, and New Zealand.

At its widest definition, it includes the following 37 countries:

- Andorra
- Australia
- Austria
- Belgium
- Canada
- Croatia
- Czech Republic
- Denmark
- Estonia
- Finland
- France
- Germany
- Greece
- Hungary
- Iceland
- Ireland
- Italy
- Latvia
- Liechtenstein
- Lithuania
- Luxembourg
- Monaco
- Netherlands
- New Zealand
- Norway
- Poland
- Portugal
- San Marino
- Slovakia
- Slovenia
- Spain
- Sweden
- Switzerland
- United Kingdom
- United States
- Vatican City